Background on the Task

Object Detection is the process of identifying things from an image or a video. It can be used tin many practical applications such as security, face detection, vehicle detection etc. We try to detect objects in the given video.

In this day and age CCTV video monitoring has become a tedious task and is very time taking and very error-prone. This project of ours will take on that problem as we try to solve the ever existing problem of CCTV monitoring, mainly vehicle and human detection and frequency assertion. Our project focuses on the problem at hand “counting vehicle trips around our campus”.

The main problem is skeptical as it dosent have a fixed requirement as the trips are variable and are dependant on the climate and other foreseen and unforeseen conditions.

Introduction

An object is an identifiable portion of an image that can be interpreted as a single unit(according to computer vision).We can identify a region in entire pixel values. Object detection is the process of finding instances of real-world objects, such as faces, vehicles, buildings, etc. Object detection plays a key role in Artificial intelligence and Machine learning applications and has a wide range of use cases.

Problem Statement

The main objective of our project is to detect the various objects present in the video and then secondarily count number of trips the tanker can take in the given CCTV footage from RGUKT Nuzvid(my college campus).

Scope of the project

This project takes on the problem of tedious video surveillance and can be used to keep fraud and cheating in check. This project has many real world applications as it can be used to detect and count trips of a commercial vehicle and tallied with the times it claims to have taken the trips, this way fraud becomes scarce and can also be used for crowd control in busy areas.

Existing Solutions

“A fast object detection algorithm using motion-based region of interest determination”

-A. Anbu , G. Agarwal , G. Srivastava

The above paper presents a fast algorithm for achieving motion-based object detection in an image sequence. While in most existing object-detection algorithms, segmentation of the image is done as the first step followed by grouping of segments, the proposed algorithm first uses motion information to identify what they call a region of interest. Since the region of interest is always smaller than the image, the proposed algorithm is 2 to 4 times faster than every existing algorithm for object detection. In terms of the accuracy with which desired object is detected, the performance of our algorithm is comparable to the existing algorithms.

Although the above algorithm did the job that it was intended to do, it was slower and lacked real world application potential. In 2015, Joseph Redmon, Santosh Divvala, Ross Girshick and Ali Farhadi published a paper on YOLO (You only look once) :Unified, Real time object detection. This revolutionized how we did object detection. This approach generally frames the object detection problem as a regression problem to spatially seperated bounding boxes and associated class probabilities. A single neural network direclty predicts in a single evalualtion, hence the name YOLO.

Advantages of YOLO

Speed is the major advantage of YOLO, as it works at 45fps, which is better than real-time.There is also a faster version which works at 155 fps but is less accurate and generally used for small tasks. The best part, it is completely open source and can be used to improvise and mould it according to your requirements.

Limitations of YOLO

YOLO imposes strong spatial constraints on bounding box predictions since each grid cell only predicts two boxes and can only have one class. This spatial constraint limits the number of nearby objects that this model can predict. This model struggles with small objects that appear in groups, such as flocks of birds. Since this model learns to predict bounding boxes from data, it struggles to generalize to objects in new or unusual aspect ratios or configurations.

YOLO workflow

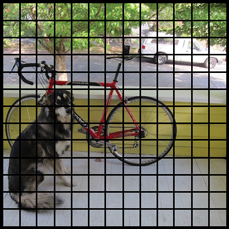

YOLO divides up the image into a grid of 13 x 13 cells

Each of these cells is responsible for predicting 5 bounding boxes. A bounding box describes the rectangle that encloses an object.

YOLO also outputs a confidence score that tells us how certain it is that the predicted bounding box actually encloses some object. This score doesn’t say anything about what kind of object is in the box, just if the shape of the box is any good.

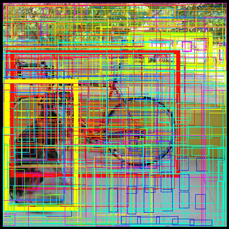

The predicted bounding boxes may look something like the following (the higher the confidence score, the fatter the box is drawn)

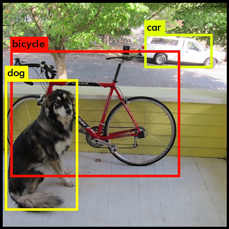

For each bounding box, the cell also predicts a class. This works just like a classifier. It gives a probability distribution over all the possible classes.The confidence score for the bounding box and the class prediction are combined into one final score that tells us the probability that this bounding box contains a specific type of object. For example, the big fat yellow box on the left is 85% sure it contains the object “dog”.

Since there are 13×13 = 169 grid cells and each cell predicts 5 bounding boxes, we end up with 845 bounding boxes in total. It turns out that most of these boxes will have very low confidence scores, so we only keep the boxes whose final score is 30% or more (you can change this threshold depending on how accurate you want the detector to be).

The final prediction is then:

From the 845 total bounding boxes we only kept these three because they gave the best results. But note that even though there were 845 separate predictions, they were all made at the same time — the neural network just ran once. And that’s why YOLO is so powerful and fast.

Transfer Learning

Transfer learning is a machine learning method where a model developed for a task is reused as the starting point for a model on a second task.Transfer Learning differs from traditional Machine Learning in that it is the use of pre-trained models that have been used for another task to jump start the development process on a new task or problem.The benefits of Transfer Learning are that it can speed up the time it takes to develop and train a model by reusing these pieces or modules of already developed models. This helps speed up the model training process and accelerate results.

How to use Transfer Learning?

-

Select Source Model: A pre-trained source model is chosen from available models. Many research institutions release models on large and challenging datasets that may be included in the pool of candidate models from which to choose from.

-

Reuse Model: The model pre-trained model can then be used as the starting point for a model on the second task of interest. This may involve using all or parts of the model, depending on the modeling technique used.

-

Tune Model: Optionally the model may need to be adapted or refined on the input-output pair data available for the task of interest.

Why is it used?

Using Transfer Learning has several benefits that we will discuss in this section. The main advantages are basically that you save training time, that your Neural Network performs better in most cases and that you don’t need a lot of data.

Usually ,you need a lot of data to train a Neural Network from Scratch but you don’t always have access to enough data. That is where Transfer Learning comes into play because with it you can build a solid machine learning model with comparatively little training data because the model is already pre-trained. This is especially valuable in Natural Language Processing.Because there is mostly expert knowledge required to create large labeled data sets.Therefore you also save a lot of training time, because it can sometimes take days or even weeks to train a deep Neural Network from Scratch on a complete task.

This is very useful since most real-world problems typically do not have millions of labeled data points to train such complex models.

Procedure/Approach

OK! That’s enough theory, let’s get into how this project was done.

Traditional Approach using OpenCV

- We clean the data first, in the preprocessing stage, we encode the raw footage and change the video dimensions in our required specifications.

- Each image is processed from the video and then the blobs are detected.

- The blob will be identified by subtracting the present image with previous image as reference, this process is called as background subtraction.

Reference Image Target image

Background Subtracted Result

- Now the blobs can be detected in our region of interest (ROI). These blobs constitute the objects in the image. Mainly used for tracking and deteting purposes in videos.

Deep Learning Approach using YoLo V3

-

In the aforementioned chapter, we have seen Transfer Learning, which is quite useful and can at times improve the learning. We used the same for our problem statement.

-

The preprocessing step is pretty much similar to the OpenCV aproach, as we need our videos to be of adequate quality and of low size.

-

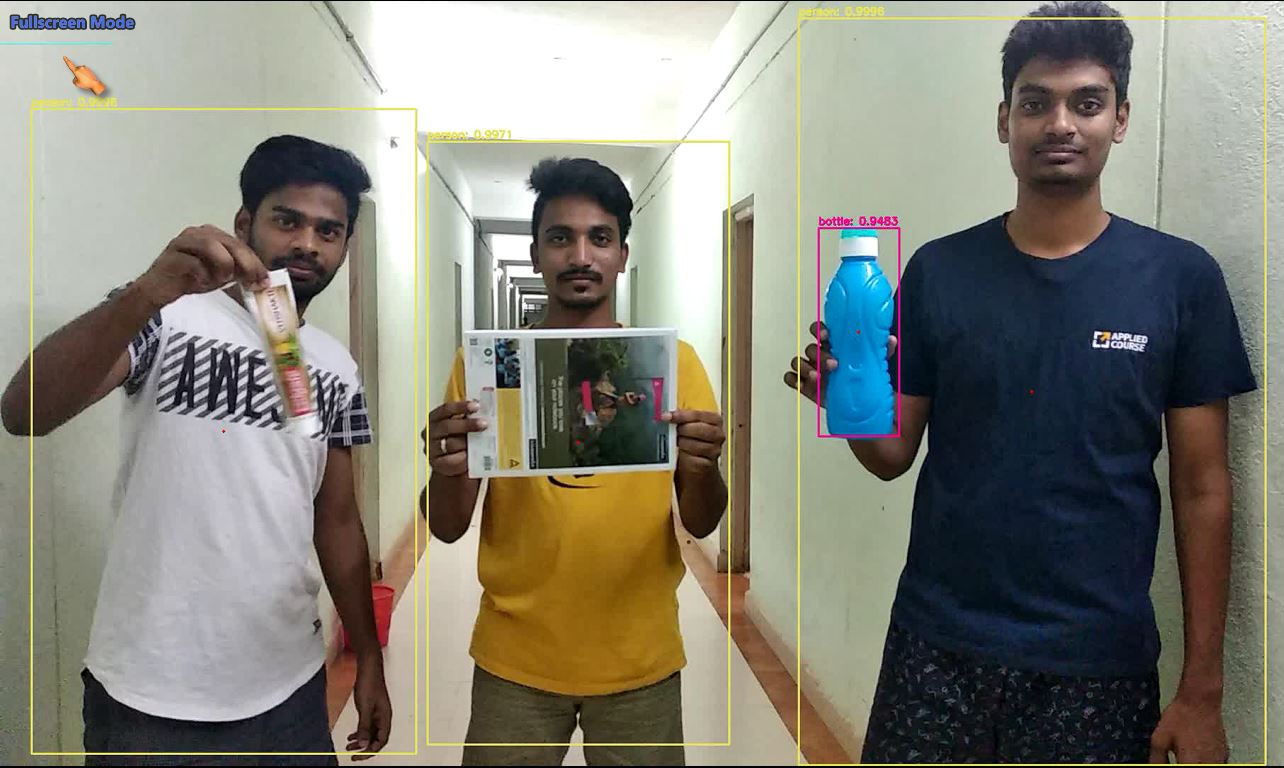

We used the COCO dataset which contains 80 classes of objects and took a pre-trained version on it, the algorithm used to train it was YOLO.

-

We use all the frames which constitutes the video and apply one-shot prediction on each frame (takes around 4 sec) and then sew it back together, this is done with the openCV function videoCapture and its write feature.

-

So far Object detection is successful, but applying it to a real-world problem is the real issue, so use now have to count the number of times the tanker crosses the ROI line.

-

We first calculate centroid of every bounding box and then increment the count each time the centroid passes the ROI line.

Tools and Technologies used

Python

An Object oriented programming language widely used and is very popular nowadays. This is the most popular language used for AI/ML because, most researchers and developers use this as a base for the tools they develop, for example tensorflow, keras, pytorch etc.

Computer Vision

-

Computer vision generally deals with pictures and videos

-

The basic idea is giving Vision/Sight to a computer through webcan , camera or video/image files.

-

This a very versatile libraray supporterd for many different languages and is used for mainly image processing tasks and in video surveillances.

Background Subtraction

Subtracting an image with reference to an image to get its foreground is the basic idea of background subtraction. Background subtraction is a widely used approach for detecting moving objects in videos from static cameras. The rationale in the approach is that of detecting the moving objects from the difference between the current frame and a reference frame, often called “background image”, or “background model”. Background subtraction is mostly done if the image in question is a part of a video stream. Background subtraction provides important cues for numerous applications in computer vision, for example surveillance tracking or human poses estimation.

Google Colaboratory

This is a free training ground for data scientists from Google, this is equipped with a tesla K80 GPU and a free TPU is also provided after the new update.

Tensorflow(Keras)

An open source machine learning library for research and production.

Challenges

- The difficulty arises when the type of the object to be detected and counting is different than that of the already available classes of objects.

- There can be a problem when the truck or the tanker both are in the same frame and there is a overlap.

- There is also the issue of how the camera is fitted and how the video feed is available to us, the perspective matters a lot as the ROI line depends on it.

- As the OpenCV approach dosen’t involve training the model, we can get the result almost immidiately, but cannot guarentee the efficiency and accuracy though. The Deep Learning approach takes a considerable amount of time to train but it is much more accurate, its even better than realtime using YOLO, the only hitch would be hardware limitations to use transfer learning

Output/Result

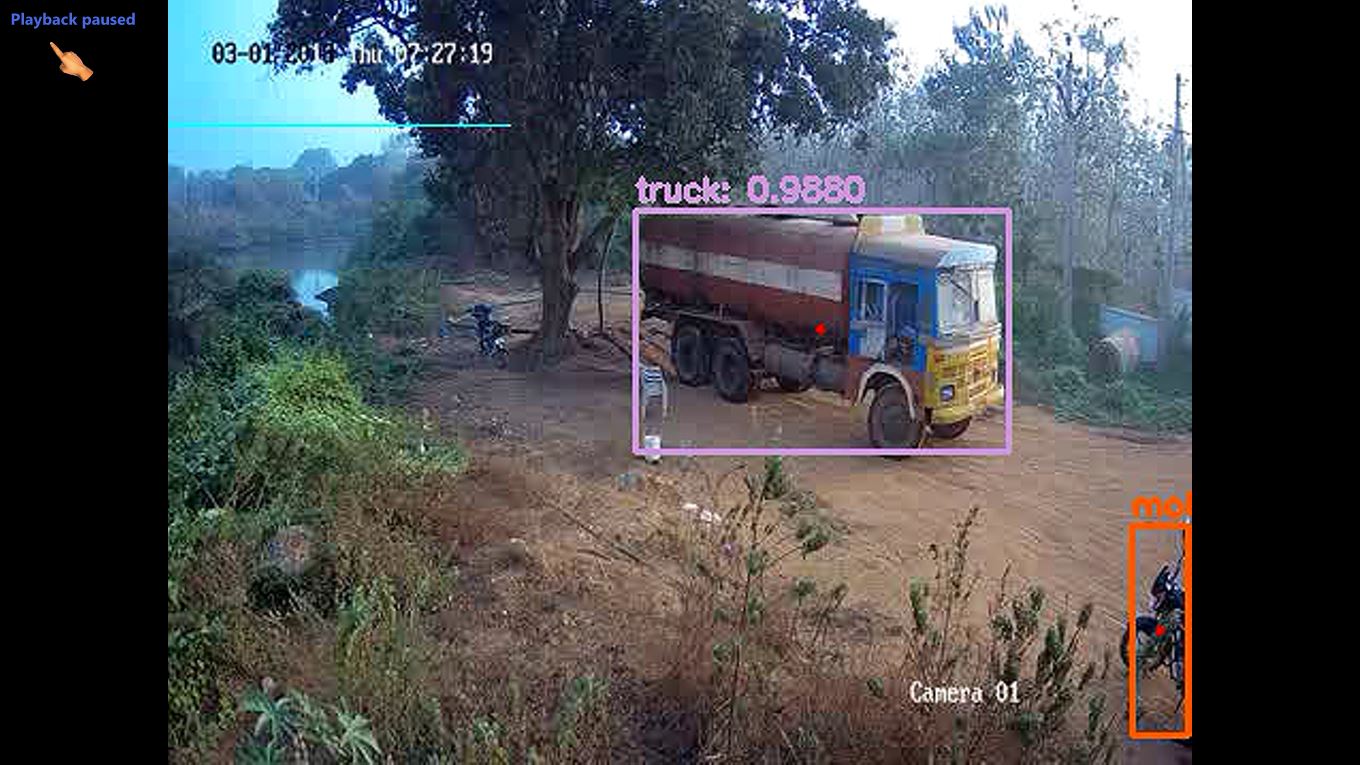

The object detection output of the pre-trained YoLo V3 model :

The Vehicle detection in the CCTV Footage :

The below two images show the count increment as the truck passes the ROI line :

References

-

Haskell. Transfer of learning : cognition, instruction, and reasoning. Academic Press,San Diego Calif 2001

-

N. Perkins and G. Salomon. Transfer of learning. International Encyclopedia of Education (2nd ed.), 1992

-

A. Anbu, G. Agarwal and G. Srivastava, “A fast object detection algorithm using motion-based region-of-interest determination,” 2002 14th International Conference on Digital Signal Processing Proceedings. DSP 2002 (Cat. No.02TH8628), Santorini, Greece, 2002, pp. 1105-1108 vol.2.

-

You Only Look Once: Unified, Real-Time Object Detection, Joseph Redmon, Santosh Divvala, Ross Girshick, Ali Farhadi